IEEE Honors Pioneering Technical Achievements


OpenAI has not released the technology to the public, to commercial entities, or even to the AI community at large. “We share people’s concerns about misuse, and it’s something that we take really seriously,” OpenAI researcher Mark Chen tells IEEE Spectrum. But the company did invite select people to experiment with DALL-E 2 and allowed them to share their results with the world. That policy of limited public testing stands in contrast to Google’s policy with its own just-released text-to-image generator, Imagen. When unveiling the system, Google announced that it would not be releasing code or a public demo due to risks of misuse and generation of harmful images. Google has released a handful of very impressive images but hasn’t shown the world any of the problematic content to which it had alluded.

That makes the images that have come out from the early DALL-E 2 experimenters more interesting than ever. The results that have emerged over the last few months say a lot about the limits of today’s deep-learning technology, giving us a window into what AI understands about the human world—and what it totally doesn’t get.

OpenAI kindly agreed to run some text prompts from Spectrum through the system. The resulting images are scattered through this article.

Spectrum asked for “a Picasso-style painting of a parrot flipping pancakes,” and DALL-E 2 served it up.

OpenAI

How DALL-E 2 Works

DALL-E 2 was trained on approximately 650 million image-text pairs scraped from the Internet, according to the paper that OpenAI posted to arXiv. From that massive data set it learned the relationships between images and the words used to describe them. OpenAI filtered the data set before training to remove images that contained obvious violent, sexual, or hateful content. “The model isn’t exposed to these concepts,” says Chen, “so the likelihood of it generating things it hasn’t seen is very, very low.” But the researchers have clearly stated that such filtering has its limits and have noted that DALL-E 2 still has the potential to generate harmful material.

Once this “encoder” model was trained to understand the relationships between text and images, OpenAI paired it with a decoder that generates images from text prompts using a process called diffusion, which begins with a random pattern of dots and slowly alters the pattern to create an image. Again, the company integrated certain filters to keep generated images in line with its content policy and has pledged to keep updating those filters. Prompts that seem likely to produce forbidden content are blocked and, in an attempt to prevent deepfakes, the system can’t exactly reproduce faces it has seen during its training. Thus far, OpenAI has also used human reviewers to check images that have been flagged as possibly problematic.
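For readers who want a feel for what “starting with random dots and slowly altering the pattern” means, here is a toy sketch. It is not OpenAI’s decoder: a real diffusion model uses a trained neural network, conditioned on the text prompt, to estimate what noise to remove at each step. In this sketch the hypothetical function `toy_reverse_diffusion` cheats by using a known target as a stand-in for that network, and the step count and noise scale are arbitrary assumptions.

```python
import random


def toy_reverse_diffusion(target, steps=50, seed=0):
    """Start from pure noise and nudge it toward `target` a little each step.

    Assumption: the known `target` stands in for a trained denoising network.
    """
    rng = random.Random(seed)
    # The "random pattern of dots": one Gaussian sample per pixel value.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(steps):
        remaining = steps - step
        # Close a fraction of the remaining gap: small early, complete at the end.
        x = [xi + (ti - xi) / remaining for xi, ti in zip(x, target)]
        # Re-inject a shrinking amount of fresh noise on all but the last step.
        if remaining > 1:
            sigma = 0.1 * (remaining - 1) / steps
            x = [xi + rng.gauss(0.0, sigma) for xi in x]
    return x


# A four-"pixel" image, purely for illustration.
target_image = [0.0, 0.5, 1.0, 0.25]
result = toy_reverse_diffusion(target_image)
```

Each pass changes the pattern only slightly, which is why the image seems to emerge gradually from static rather than appearing all at once.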

What Industries DALL-E 2 Could Disrupt

Because of DALL-E 2’s clear potential for misuse, OpenAI initially granted access to only a few hundred people, mostly AI researchers and artists. Unlike the lab’s language-generating model, GPT-3, DALL-E 2 has not been made available for even limited commercial use, and OpenAI hasn’t publicly discussed a timetable for doing so. But from browsing the images that DALL-E 2 users have created and posted on forums such as Reddit, it does seem like some professions should be worried. For example, DALL-E 2 excels at food photography, at the type of stock photos used for corporate brochures and websites, and with illustrations that wouldn’t seem out of place on a dorm room poster or a magazine cover.

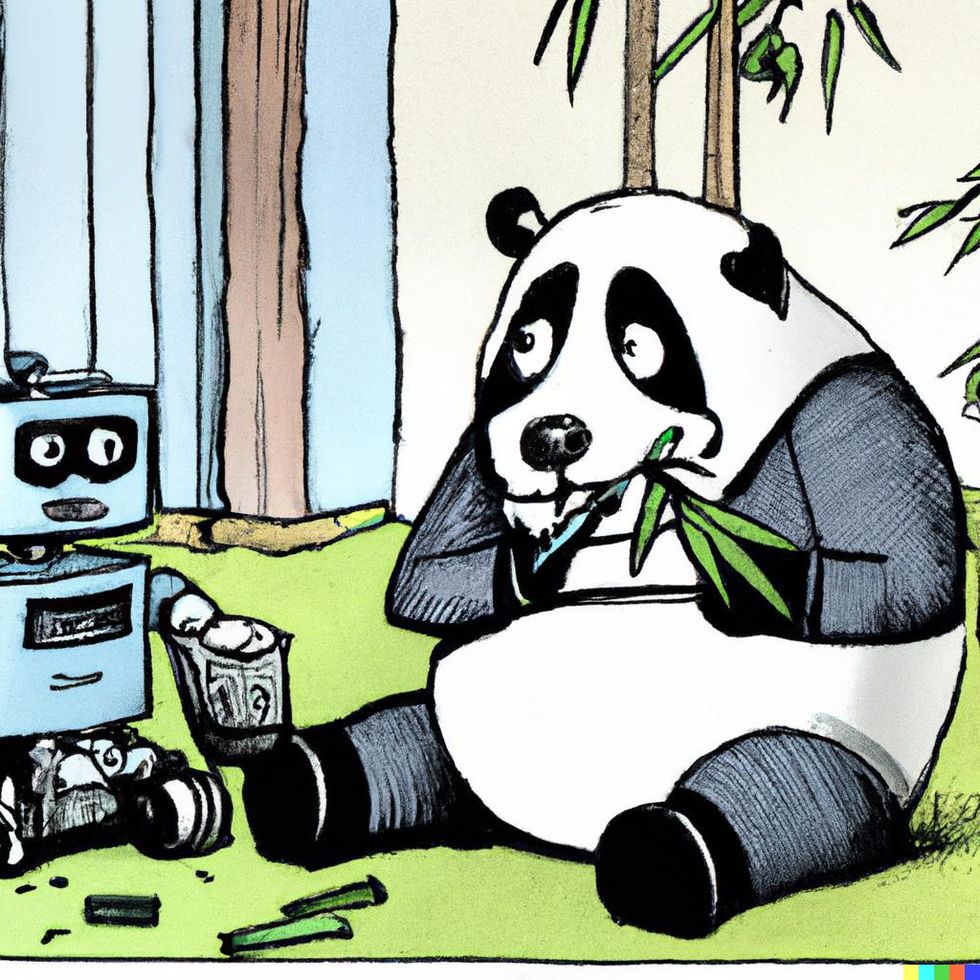

Spectrum asked for a “New Yorker-style cartoon of an unemployed panda realizing her job eating bamboo has been taken by a robot.” OpenAI

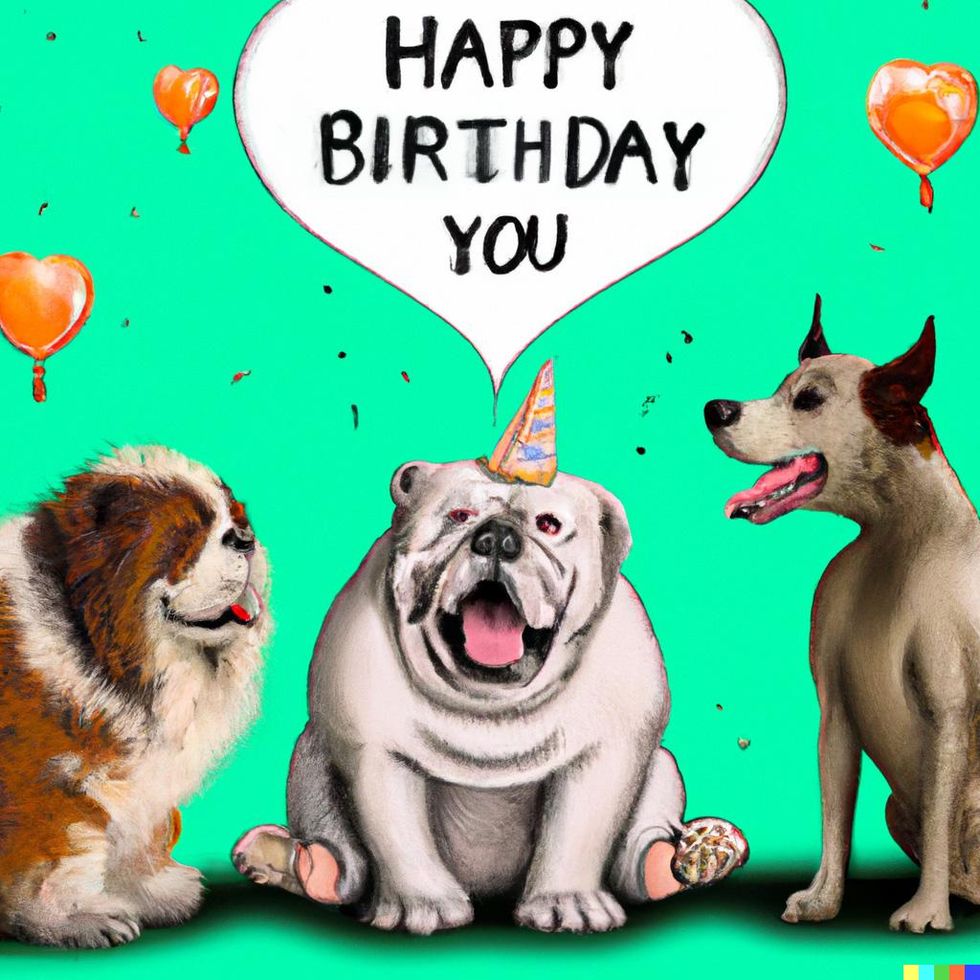

Here’s DALL-E 2’s response to the prompt: “An overweight old dog looks delighted that his younger and healthier dog friends have remembered his birthday, in the style of a greeting card.” OpenAI

Spectrum reached out to a few entities within these threatened industries. A spokesperson for Getty Images, a leading supplier of stock photos, said the company isn’t worried. “Technologies such as DALL-E are no more a threat to our business than the two-decade reality of billions of cellphone cameras and the resulting trillions of images,” the spokesperson said. What’s more, the spokesperson said, before models such as DALL-E 2 can be used commercially, there are big questions to be answered about their use for generating deepfakes, the societal biases inherent in the generated images, and “the rights to the imagery and the people, places, and objects within the imagery that these models were trained on.” The last part of that sounds like a lawsuit brewing.

Rachel Hill, CEO of the Association of Illustrators, also brought up the issues of copyright and compensation for images’ use in training data. Hill admits that “AI platforms may attract art directors who want to reach for a fast and potentially lower-price illustration, particularly if they are not looking for something of exceptional quality.” But she still sees a strong human advantage: She notes that human illustrators help clients generate initial concepts, not just the final images, and that their work often relies “on human experience to communicate an emotion or opinion and connect with its viewer.” It remains to be seen, says Hill, whether DALL-E 2 and its equivalents could do the same, particularly when it comes to generating images that fit well with a narrative or match the tone of an article about current events.

To gauge its ability to replicate the kinds of stock photos used in corporate communications, Spectrum asked for “a multiethnic group of blindfolded coworkers touching an elephant.” OpenAI

Where DALL-E 2 Fails

For all DALL-E 2’s strengths, the images that have emerged from eager experimenters show that it still has a lot to learn about the world. Here are three of its most obvious and interesting bugs.

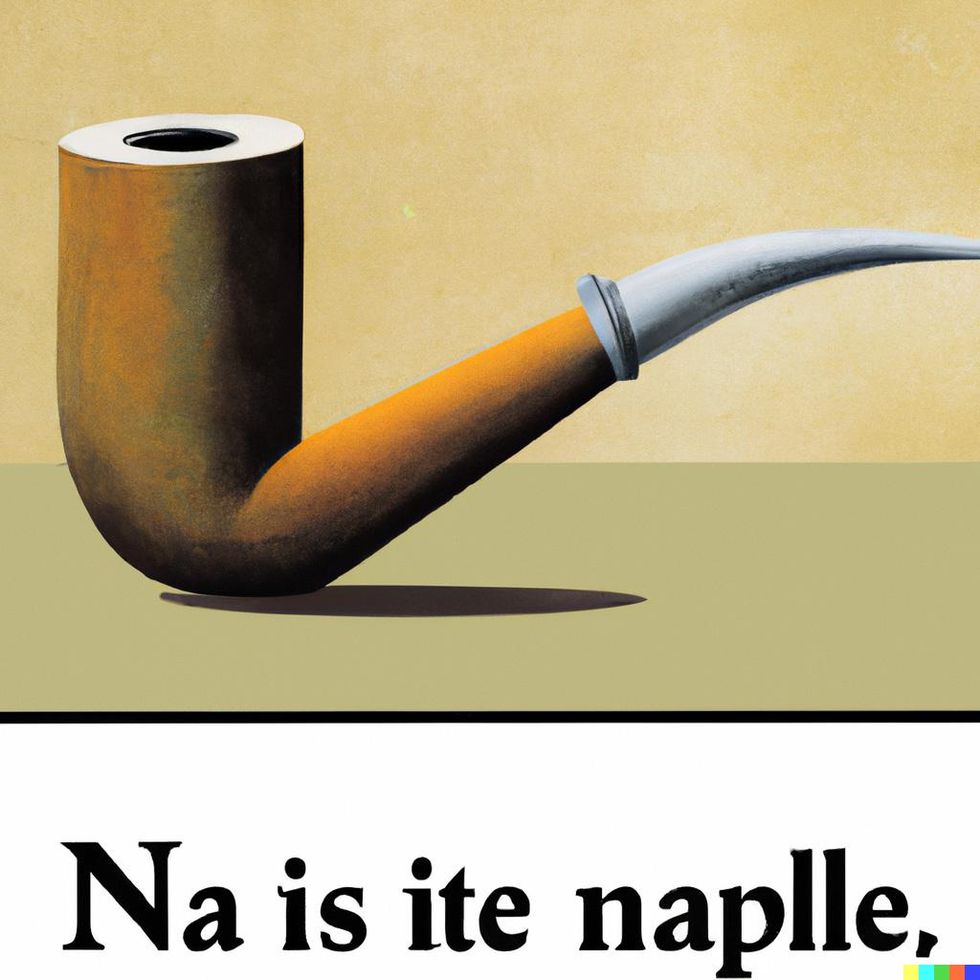

Text: It’s ironic that DALL-E 2 struggles to place comprehensible text in its images, given that it’s so adept at making sense of the text prompts that it uses to generate images. But users have discovered that asking for any kind of text usually results in a mishmash of letters. The AI blogger Janelle Shane had fun asking the system to create corporate logos and observing the resulting mess. It seems likely that a future version will correct this issue, however, particularly since OpenAI has plenty of text-generation expertise with its GPT-3 team. “Eventually a DALL-E successor will be able to spell Waffle House, and I will mourn that day,” Shane tells Spectrum. “I’ll just have to move on to a different method of messing with it.”

To test DALL-E 2’s skills with text, Spectrum riffed on the famous Magritte painting that has the French words “Ceci n’est pas une pipe” below a picture of a pipe. Spectrum asked for the words “This is not a pipe” beneath a picture of a pipe. OpenAI

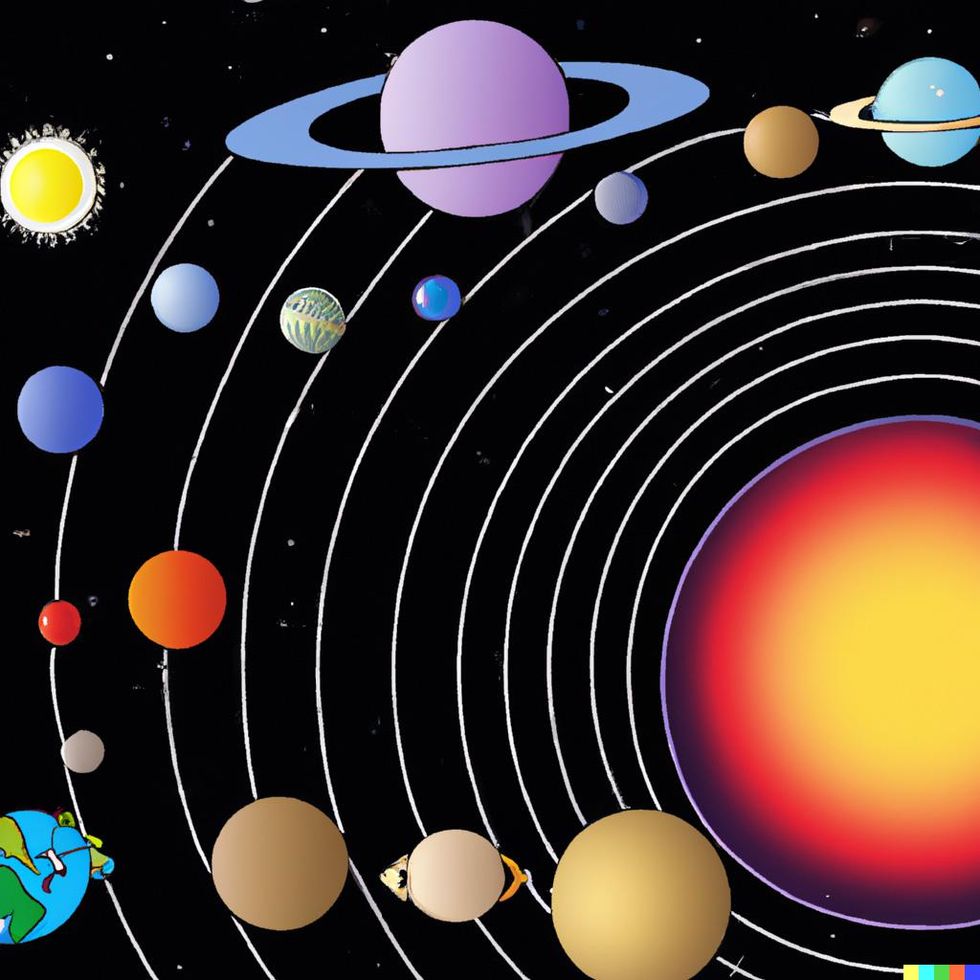

Science: You could argue that DALL-E 2 understands some laws of science, since it can easily depict a dropped object falling or an astronaut floating in space. But asking for an anatomical diagram, an X-ray image, a mathematical proof, or a blueprint yields images that may be superficially right but are fundamentally all wrong. For example, Spectrum asked DALL-E 2 for an “illustration of the solar system, drawn to scale,” and got back some very strange versions of Earth and its far too many presumptive interplanetary neighbors—including our favorite, Planet Hard-Boiled Egg. “DALL-E doesn’t know what science is. It just knows how to read a caption and draw an illustration,” explains OpenAI researcher Aditya Ramesh, “so it tries to make up something that’s visually similar without understanding the meaning.”

Spectrum asked for “an illustration of the solar system, drawn to scale,” and got back a very crowded and strange collection of planets, including a blobby Earth at lower left and something resembling a hard-boiled egg at upper left. OpenAI

Faces: Sometimes, when DALL-E 2 tries to generate photorealistic images of people, the faces are pure nightmare fodder. That’s partly because, during its training, OpenAI introduced some deepfake safeguards to prevent it from memorizing faces that appear often on the Internet. The system also rejects uploaded images if they contain realistic faces of anyone, even nonfamous people. But an additional issue, an OpenAI representative tells Spectrum, is that the system was optimized for images with a single focus of attention. That’s why it’s great at portraits of imaginary people, such as this nuanced portrait produced when Spectrum asked for “an astronaut gazing back at Earth with a wistful expression on her face,” but pretty terrible at group shots and crowd scenes. Just look what happened when Spectrum asked for a picture of seven engineers gathered around a whiteboard.

This image shows DALL-E 2’s skill with portraits. It also shows that the system’s gender bias can be overcome with careful prompts. This image was a response to the prompt “an astronaut gazing back at Earth with a wistful expression on her face.” OpenAI

When DALL-E 2 is asked to generate pictures of more than one human at a time, things fall apart. This image of “seven engineers gathered around a white board” includes some monstrous faces and hands. OpenAI

Bias: We’ll go a little deeper on this important topic. DALL-E 2 is considered a multimodal AI system because it was trained on images and text, and it exhibits a form of multimodal bias. For example, if a user asks it to generate images of a CEO, a builder, or a technology journalist, it will typically return images of men, based on the image-text pairs it saw in its training data.

Spectrum queried DALL-E 2 for an image of “a technology journalist writing an article about a new AI system that can create remarkable and strange images.” This image shows one of its responses; the others are shown at the top of this article. OpenAI

OpenAI asked external researchers who work in this area to serve as a “red team” before DALL-E 2’s release, and their insights helped inform OpenAI’s write-up on the system’s risks and limitations. They found that in addition to replicating societal stereotypes regarding gender, the system also over-represents white people and Western traditions and settings. One red team group, from the lab of Mohit Bansal at the University of North Carolina, Chapel Hill, had previously created a system called DALL-Eval that evaluated the first DALL-E for bias, and they used it to check the second iteration as well. The group is now investigating the use of such evaluation systems earlier in the training process, perhaps by sampling data sets before training and seeking additional images to fix problems of underrepresentation, or by using bias metrics as a penalty or reward signal to push the image-generating system in the right direction.

Chen notes that a team at OpenAI has already begun experimenting with “machine-learning mitigations” to correct for bias. For example, during DALL-E 2’s training the team found that removing sexual content created a data set with more males than females, which caused the system to generate more images of males. “So we adjusted our training methodology and up-weighted images of females so they’re more likely to be generated,” Chen explains. Users can also help DALL-E 2 generate more diverse results by specifying gender, ethnicity, or geographical location using prompts such as “a female astronaut” or “a wedding in India.”
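OpenAI has not said here exactly how it up-weighted images, so the following is only a generic illustration of the idea, using inverse-frequency weights. The 70/30 split, the labels, and the helper name `balancing_weights` are all assumptions made for the example, not details from OpenAI.

```python
import random
from collections import Counter


def balancing_weights(labels):
    """Weight each example inversely to how common its group is.

    Every group ends up with the same total weight, so sampling by these
    weights draws from each group about equally often.
    """
    counts = Counter(labels)
    n_groups = len(counts)
    return [1.0 / (n_groups * counts[label]) for label in labels]


# Hypothetical skewed data set: 70 examples of one group, 30 of the other.
labels = ["male"] * 70 + ["female"] * 30
weights = balancing_weights(labels)

# Draw a large weighted sample and measure how often each group appears.
rng = random.Random(0)
sample = rng.choices(labels, weights=weights, k=10_000)
share = Counter(sample)["female"] / len(sample)
```

With these weights the under-represented group is drawn roughly half the time despite making up less than a third of the data, which is the effect Chen describes in general terms.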

But critics of OpenAI say the overall trend toward training models on massive uncurated data sets should be questioned.

Vinay Prabhu, an independent researcher who co-authored a 2021 paper about multimodal bias, feels that the AI research community overvalues scaling up models via “engineering brawn” and undervalues innovation. “There is this sense of faux claustrophobia that seems to have consumed the field where Wikipedia-based data sets spanning [about] 30 million image-text pairs are somehow ad hominem declared to be ‘too small’!” he tells Spectrum in an email.

Prabhu champions the idea of creating smaller but “clean” data sets of image-text pairs from such sources as Wikipedia and e-books, including textbooks and manuals. “We could also launch (with the help of agencies like UNESCO for example) a global drive to contribute images with descriptions according to W3C’s best practices and whatever is recommended by vision-disabled communities,” he suggests.

What’s Next for DALL-E 2

The DALL-E 2 team says they’re eager to see what faults and failures early users discover as they experiment with the system, and they’re already thinking about next steps. “We’re very much interested in improving the general intelligence of the system,” says Ramesh, adding that the team hopes to build “a deeper understanding of language and its relationship to the world into DALL-E.” He notes that OpenAI’s text-generating GPT-3 has a surprisingly good understanding of common sense, science, and human behavior. “One aspirational goal could be to try to connect the knowledge that GPT-3 has to the image domain through DALL-E,” Ramesh says.

As users have worked with DALL-E 2 over the past few months, their initial awe at its capabilities changed fairly quickly to bemusement at its quirks. As one experimenter put it in a blog post, “Working with DALL-E definitely still feels like attempting to communicate with some kind of alien entity that doesn’t quite reason in the same ontology as humans, even if it theoretically understands the English language.” One day, maybe, OpenAI or its competitors will create something that approximates human artistry. For now, we’ll appreciate the marvels and laughs that come from an alien intelligence, perhaps one hailing from Planet Hard-Boiled Egg.
