Advanced driver assistance systems: Cameras or sensor fusion?


One of the fiercest areas of competition in the automotive industry today is the field of advanced driver assistance systems (ADAS) and automated driving, both of which have the potential to significantly improve safety.

Building on these technologies, a fully autonomous Level 5 vehicle could deliver game-changing economic or productivity benefits, such as a fleet of robotaxis that would remove the need to pay drivers' wages, or the ability for employees to work or rest in their car.

Carmakers are currently testing two key approaches to these ADAS and autonomous driving systems, with interim steps appearing as the driver-assist features we see and use today: autonomous emergency braking (AEB), lane-keeping aids, blind-spot alerts and the like.


The first approach relies solely on cameras as the source of the data on which the system bases its decisions. The second approach is known as sensor fusion, and aims to combine data from cameras with data from other sensors such as lidar, radar and ultrasonic sensors.

Cameras only

Tesla and Subaru are two popular carmakers that rely on cameras for their ADAS and other autonomous driving features.

Philosophically, the rationale for using cameras only can perhaps be summarised by paraphrasing Tesla CEO Elon Musk, who has noted that there is no need for anything other than cameras when humans can drive using nothing more than their eyes.

Musk has elaborated further, pointing out that having multiple cameras acts like having ‘eyes in the back of one’s head’, with the potential to drive a car at a significantly higher level of safety than an average person.

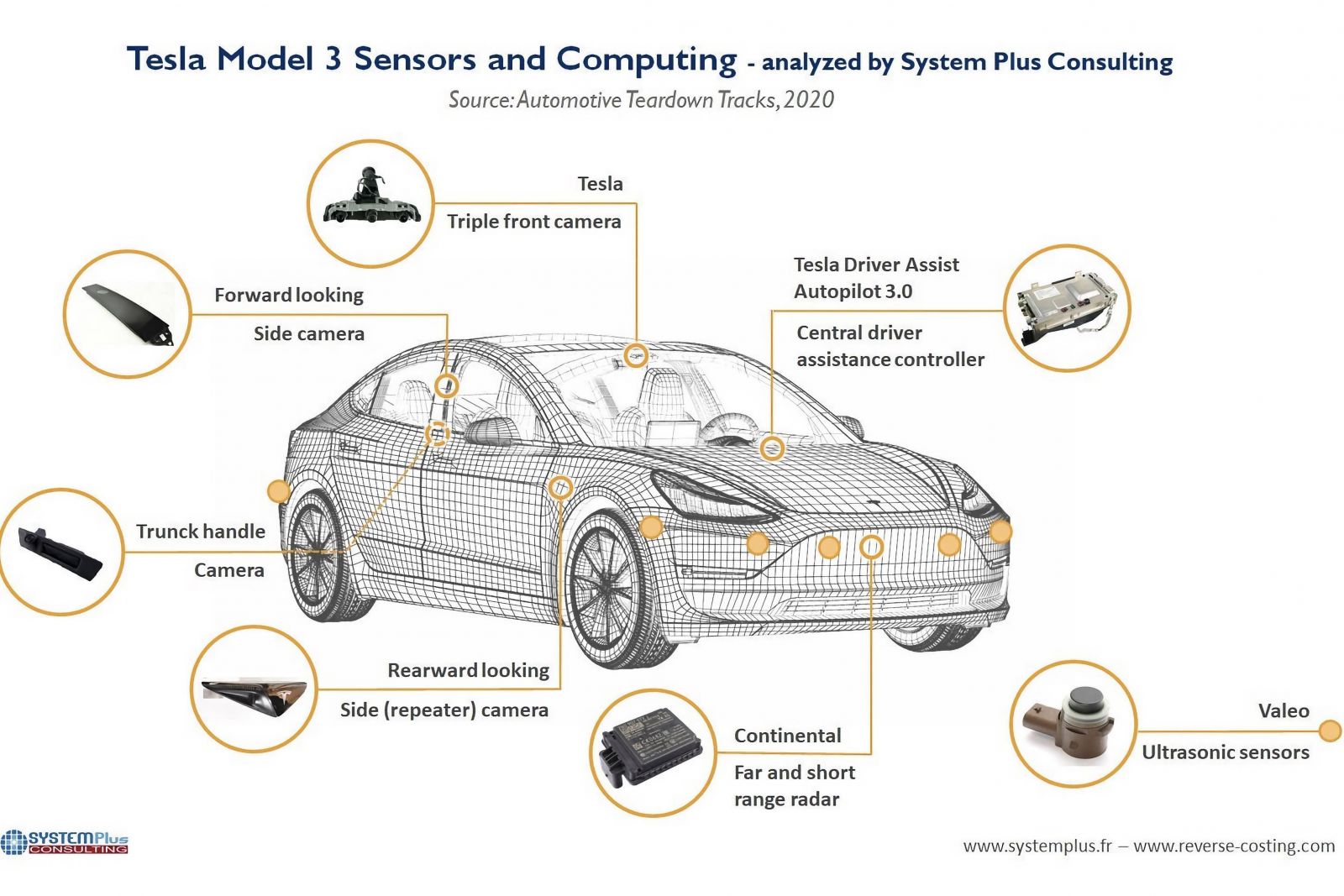

Accordingly, the Tesla Model 3 and Model Y vehicles on sale today offer a sophisticated setup consisting of eight outward-facing cameras.

These comprise three windscreen-mounted forward-facing cameras, each with a different focal length; a pair of forward-looking side cameras mounted on the B-pillars; a pair of rearward-looking side cameras mounted within the side repeater light housings; and the obligatory reverse-view camera.

Subaru, meanwhile, uses a pair of windscreen-mounted cameras for most versions of its EyeSight suite of driver assistance systems. The latest EyeSight X generation, as seen in the MY23 Subaru Outback (currently revealed for the US but arriving here soon), also adds a third wide-angle greyscale camera for a wider field of view.

Proponents of these camera-only setups claim that using multiple cameras, each with different fields of view and focal lengths, provides sufficient depth perception to support technologies such as adaptive cruise control, lane-keep assist and other ADAS features.
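To make the depth-perception point concrete, one textbook way two horizontally offset cameras can judge distance is stereo triangulation: a point's depth is Z = f·B/d, where f is the focal length in pixels, B the baseline between the two cameras and d the disparity (how far the point shifts between the two images). The sketch below is a general illustration under that pinhole-camera assumption, not a description of how Tesla or Subaru actually process their images; the camera parameters are hypothetical, and the hard part of real systems (matching the same point across both images) is omitted.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by two parallel, horizontally offset cameras.

    Uses the pinhole stereo relation Z = f * B / d.
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity (or was mismatched).
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera parameters, chosen only for illustration.
FOCAL_PX = 1400.0   # focal length expressed in pixels
BASELINE_M = 0.35   # distance between the two camera centres, metres

for d in (49.0, 9.8, 4.9):
    print(f"disparity {d:5.1f} px -> depth {stereo_depth_m(FOCAL_PX, BASELINE_M, d):6.1f} m")
```

Because depth is inversely proportional to disparity, a one-pixel matching error shifts the estimate far more at 100 m than at 10 m, which is one reason camera-based depth tends to become noisier with distance.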

This is achieved without having to allocate valuable computing resources to interpreting other data inputs, while also removing the risk of receiving conflicting information that would force the car’s on-board computers to prioritise data from one type of sensor over another.

With radar and other sensors typically mounted behind or within the front bumper, a camera-only setup also has the practical benefit of reducing repair bills in the event of a collision, as these sensors would not need to be replaced.

The obvious drawback of relying solely on cameras is that their effectiveness can be severely curtailed in poor weather conditions such as heavy rain, fog or snow, or at times of day when bright sunlight shines directly into the camera lenses. Moreover, a dirty windscreen could obscure the cameras’ view and thereby hamper performance.

However, in a recent presentation, Tesla’s former head of Autopilot, Andrej Karpathy, claimed that advances in Tesla Vision could effectively mitigate any issues caused by temporary inclement weather.

By using an advanced neural network and techniques such as auto-labelling of objects, Tesla Vision can continue to recognise objects in front of the car and predict their paths over at least short distances, even when debris or bad weather momentarily obstructs the camera’s view.
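Tesla has not published Tesla Vision’s internals at this level, so the sketch below swaps in a much simpler, classic technique to show the idea of predicting through a brief occlusion: keep tracking an object by extrapolating its last known position at constant velocity for a few frames while the camera cannot see it, and drop the track if it stays hidden too long. The `Track` structure, `update` function and the five-frame limit are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Track:
    x: float                # distance ahead of the car, metres
    y: float                # lateral offset, metres
    vx: float               # estimated velocity, metres per second
    vy: float
    frames_unseen: int = 0  # consecutive frames without a detection


MAX_COAST_FRAMES = 5  # hypothetical limit on how long a prediction is trusted


def update(track: Track, detection: Optional[Tuple[float, float]], dt: float) -> Optional[Track]:
    """Advance a track by one frame, coasting on its velocity if the object is not detected."""
    if detection is not None:
        # Object visible: re-estimate velocity from the displacement since the last frame.
        nx, ny = detection
        return Track(nx, ny, (nx - track.x) / dt, (ny - track.y) / dt, 0)
    if track.frames_unseen >= MAX_COAST_FRAMES:
        return None  # hidden for too long: stop trusting the prediction
    # Object obscured (e.g. by spray or debris): predict its path assuming constant velocity.
    return Track(track.x + track.vx * dt, track.y + track.vy * dt,
                 track.vx, track.vy, track.frames_unseen + 1)


track = Track(x=30.0, y=0.0, vx=-2.0, vy=0.0)
for detection in [(29.8, 0.0), None, None]:  # seen once, then obscured for two frames
    track = update(track, detection, dt=0.1)
print(track)
```

The frame limit captures the trade-off the text describes: prediction bridges momentary gaps, but the longer the view stays blocked, the less the extrapolated position can be trusted.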

If the weather were constantly bad, however, the quality and reliability of data from a camera are unlikely to match those of a fusion setup incorporating data from sensors such as radar, which may be less affected by bad weather.

Moreover, relying on only one type of sensor removes the redundancy that having different sensor types provides.

Sensor fusion

The vast majority of carmakers, by contrast, have opted to use multiple sensors to develop their ADAS and related autonomous driving systems.

Known as sensor fusion, this involves taking simultaneous data feeds from each of these sensors and combining them to produce a reliable and holistic view of the car’s current driving environment.
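As a minimal sketch of the combining step, one textbook approach for simultaneous measurements of the same quantity is inverse-variance weighting (the single-step, static case of a Kalman filter update): each sensor’s estimate is weighted by how much it is trusted, so precise sensors count for more. The noise figures below are hypothetical, and production systems add object association and tracking over time, which this omits.

```python
from typing import Iterable, Tuple


def fuse(estimates: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance, which is never larger
    than the smallest input variance.
    """
    weights_sum = 0.0
    weighted_total = 0.0
    for value, variance in estimates:
        w = 1.0 / variance  # lower variance -> higher weight
        weights_sum += w
        weighted_total += w * value
    if weights_sum == 0.0:
        raise ValueError("no estimates to fuse")
    return weighted_total / weights_sum, 1.0 / weights_sum


# Hypothetical range readings to the same car ahead: (metres, variance in m^2).
camera = (41.0, 4.0)   # camera depth estimate: noisier at range
radar = (40.0, 0.25)   # radar range: precise
lidar = (40.2, 0.04)   # lidar range: very precise

distance, variance = fuse([camera, radar, lidar])
print(f"fused distance {distance:.2f} m, std dev {variance ** 0.5:.2f} m")
```

The fused result leans towards the most trusted sensors yet is more certain than any single input, which is the "more accurate, more reliable" benefit the article attributes to fusion.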

As discussed above, in addition to multiple cameras, the sensors deployed typically include radar, ultrasonic sensors and, in some cases, lidar.

Radar (radio detection and ranging) detects objects by emitting pulses of radio waves and measuring the time taken for them to be reflected back.
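The ranging arithmetic behind this is simple: the pulse travels to the object and back, so range = propagation speed × round-trip time ÷ 2, and radio waves travel at the speed of light. The sketch models the idealised pulse description above; many production radars modulate their signals differently, but the time-of-flight relationship is the same. The function name is hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves propagate at the speed of light


def radar_range_m(round_trip_s: float) -> float:
    """Distance to a reflector from a pulse's round-trip time.

    The pulse covers the distance twice (out and back), hence the halving.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# An echo returning after 0.5 microseconds comes from roughly 75 m away.
print(f"{radar_range_m(0.5e-6):.1f} m")
```

The tiny timescales explain why radar electronics must resolve nanoseconds: each extra metre of range adds only about 6.7 ns to the round trip.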

Radar generally does not offer the same level of detail as lidar or cameras; with its low resolution, it cannot accurately determine the precise shape of an object, or distinguish between several smaller objects positioned close together.

However, it is largely unaffected by weather conditions such as rain, fog or dust, and is generally a reliable indicator of whether there is an object in front of the car.

A lidar (light detection and ranging) sensor works on a similar fundamental principle to radar, but uses lasers instead of radio waves. These lasers emit pulses of light, which are reflected back by surrounding objects.

Even more so than cameras, lidar can create a highly accurate 3D map of a car’s surroundings, distinguish between pedestrians and animals, and easily track the movement and direction of these objects.
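The 3D map comes from combining each laser return’s range (from the same time-of-flight arithmetic, using the speed of light) with the direction the beam was pointing. Converting those spherical coordinates to Cartesian x/y/z gives one point, and many such points form a point cloud. The sketch below assumes a sensor frame with x forward, y left and z up; the function names and the sample sweep are hypothetical.

```python
import math
from typing import List, Tuple

SPEED_OF_LIGHT_M_S = 299_792_458.0


def lidar_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Convert one laser return into an (x, y, z) point in the sensor's frame.

    Assumed frame: x forward, y left, z up; azimuth is measured from x towards y,
    elevation is measured up from the horizontal plane.
    """
    r = SPEED_OF_LIGHT_M_S * round_trip_s / 2.0  # out-and-back time of flight
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = r * math.cos(el)                # projection onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), r * math.sin(el))


def point_cloud(returns: List[Tuple[float, float, float]]) -> List[Tuple[float, float, float]]:
    """Build a point cloud from (round_trip_s, azimuth_deg, elevation_deg) returns."""
    return [lidar_point(*ret) for ret in returns]


# A hypothetical sweep: three beams 2 degrees apart, each hitting a surface 20 m away.
t = 2 * 20.0 / SPEED_OF_LIGHT_M_S
for x, y, z in point_cloud([(t, -2.0, 0.0), (t, 0.0, 0.0), (t, 2.0, 0.0)]):
    print(f"x={x:6.2f}  y={y:5.2f}  z={z:4.2f}")
```

Because each point carries an exact position, tracking how clusters of points move between sweeps is what lets lidar follow an object’s movement and direction.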

However, like cameras, lidar is still affected by weather conditions, and it remains expensive to fit.

Ultrasonic sensors have traditionally been used in cars as parking sensors, giving the driver an audible signal of how close they are to other vehicles through a technique known as echolocation, which bats also use in the natural world.
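Echolocation uses the same round-trip arithmetic as radar, but with the speed of sound, which varies with air temperature (roughly 331.3 + 0.606·T m/s for T in °C, a standard linear approximation). The beep-rate mapping below is a hypothetical example of how a parking aid might turn that distance into the audible signal; the 0.3 m and 1.5 m thresholds are assumptions, and real systems vary by manufacturer.

```python
from typing import Optional


def speed_of_sound_m_s(air_temp_c: float) -> float:
    """Linear approximation of the speed of sound in dry air."""
    return 331.3 + 0.606 * air_temp_c


def echo_distance_m(round_trip_s: float, air_temp_c: float = 20.0) -> float:
    """Distance to an obstacle from an ultrasonic echo's round-trip time."""
    return speed_of_sound_m_s(air_temp_c) * round_trip_s / 2.0


def beep_interval_s(distance_m: float) -> Optional[float]:
    """Hypothetical parking-aid mapping: the closer the obstacle, the faster the beeps.

    Returns None when nothing is close enough to warn about, and 0.0 for a
    continuous tone.
    """
    if distance_m > 1.5:
        return None
    if distance_m < 0.3:
        return 0.0
    # Scale linearly from 0.1 s between beeps (at 0.3 m) to 0.8 s (at 1.5 m).
    return 0.1 + (distance_m - 0.3) / (1.5 - 0.3) * 0.7


d = echo_distance_m(0.0035)  # a 3.5 ms echo at 20 degrees C
print(f"obstacle at {d:.2f} m, beep every {beep_interval_s(d):.2f} s")
```

Sound travels roughly a million times slower than radio waves, so ultrasonic echoes take milliseconds rather than nanoseconds, which makes the timing cheap to measure but limits the sensors to short ranges.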

Because they are effective at measuring short distances at low speeds, these sensors could, in ADAS and autonomous vehicles, allow a car to find an empty spot in a multi-storey carpark and park itself, for example.
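One simple way such a system could find a space is sketched below as a hypothetical illustration, not any carmaker’s algorithm: while the car creeps past a row of parked vehicles, a side-facing ultrasonic sensor logs lateral distance against distance travelled, and a long enough stretch with no nearby echo is a candidate gap. The function name, the 1.5 m "occupied" threshold and the sample log are all assumptions.

```python
from typing import List, Optional, Tuple


def find_parking_gap(
    samples: List[Tuple[float, Optional[float]]],
    required_length_m: float,
    occupied_within_m: float = 1.5,
) -> Optional[Tuple[float, float]]:
    """Return (start, end) odometer positions of the first free gap long enough to park in.

    samples: (odometer_m, side_distance_m) pairs recorded while driving slowly
    past parked cars; side_distance_m is None when no echo came back.
    Gaps still open at the end of the log are not reported.
    """
    gap_start: Optional[float] = None
    for odometer, side in samples:
        free = side is None or side > occupied_within_m
        if free and gap_start is None:
            gap_start = odometer                    # a free stretch begins
        elif not free and gap_start is not None:
            if odometer - gap_start >= required_length_m:
                return gap_start, odometer          # gap closed off and long enough
            gap_start = None                        # too short: keep looking
    return None


# Hypothetical log: a parked car, then a 6 m opening, then another parked car.
log = [(x * 0.5, 0.8) for x in range(8)]            # 0.0-3.5 m: car alongside
log += [(4.0 + x * 0.5, None) for x in range(12)]   # 4.0-9.5 m: no echo
log += [(10.0 + x * 0.5, 0.7) for x in range(4)]    # 10.0-11.5 m: next car
print(find_parking_gap(log, required_length_m=5.5))
```

The sampling interval sets the precision of the measured gap, which is one reason this kind of manoeuvre suits the low speeds at which ultrasonic sensors work best.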

The primary benefit of a sensor fusion approach is the opportunity to obtain more accurate, more reliable data in a wider range of conditions, as different types of sensors work better in different situations.

This approach also offers greater redundancy in the event that a particular sensor fails.
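Redundancy can be illustrated by combining only the sensors that currently report themselves healthy, so losing one (say, a camera blinded by low sun) degrades the system rather than leaving it blind. The sketch below takes the median of the healthy readings, which with three or more sensors also shrugs off a single wildly wrong value. The `Reading` type, sensor names, health flags and the two-sensor minimum are hypothetical.

```python
import statistics
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Reading:
    sensor: str          # hypothetical sensor name
    distance_m: float    # reported distance to the object ahead
    healthy: bool        # self-diagnostic status, e.g. False if blinded or blocked


def redundant_distance(readings: List[Reading], min_sensors: int = 2) -> Optional[float]:
    """Median distance over healthy sensors, or None if too few remain.

    With three or more healthy sensors, the median also ignores a single
    reading that is wildly wrong without having been flagged as a fault.
    """
    usable = [r.distance_m for r in readings if r.healthy]
    if len(usable) < min_sensors:
        return None  # too few independent sources: e.g. hand control back to the driver (hypothetical policy)
    return statistics.median(usable)


readings = [
    Reading("camera", 95.0, healthy=False),  # blinded by low sun
    Reading("radar", 40.1, healthy=True),
    Reading("lidar", 39.9, healthy=True),
]
print(redundant_distance(readings))
```

A camera-only car has no second source to fall back on when its cameras are compromised, which is the redundancy gap the article highlights.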

Multiple sensors, of course, also mean multiple pieces of hardware, which ultimately raises the cost of sensor fusion setups above that of a comparable camera-only system.

For example, lidar sensors are often only available on luxury vehicles, such as with the Drive Pilot system offered on the Mercedes-Benz EQS.


